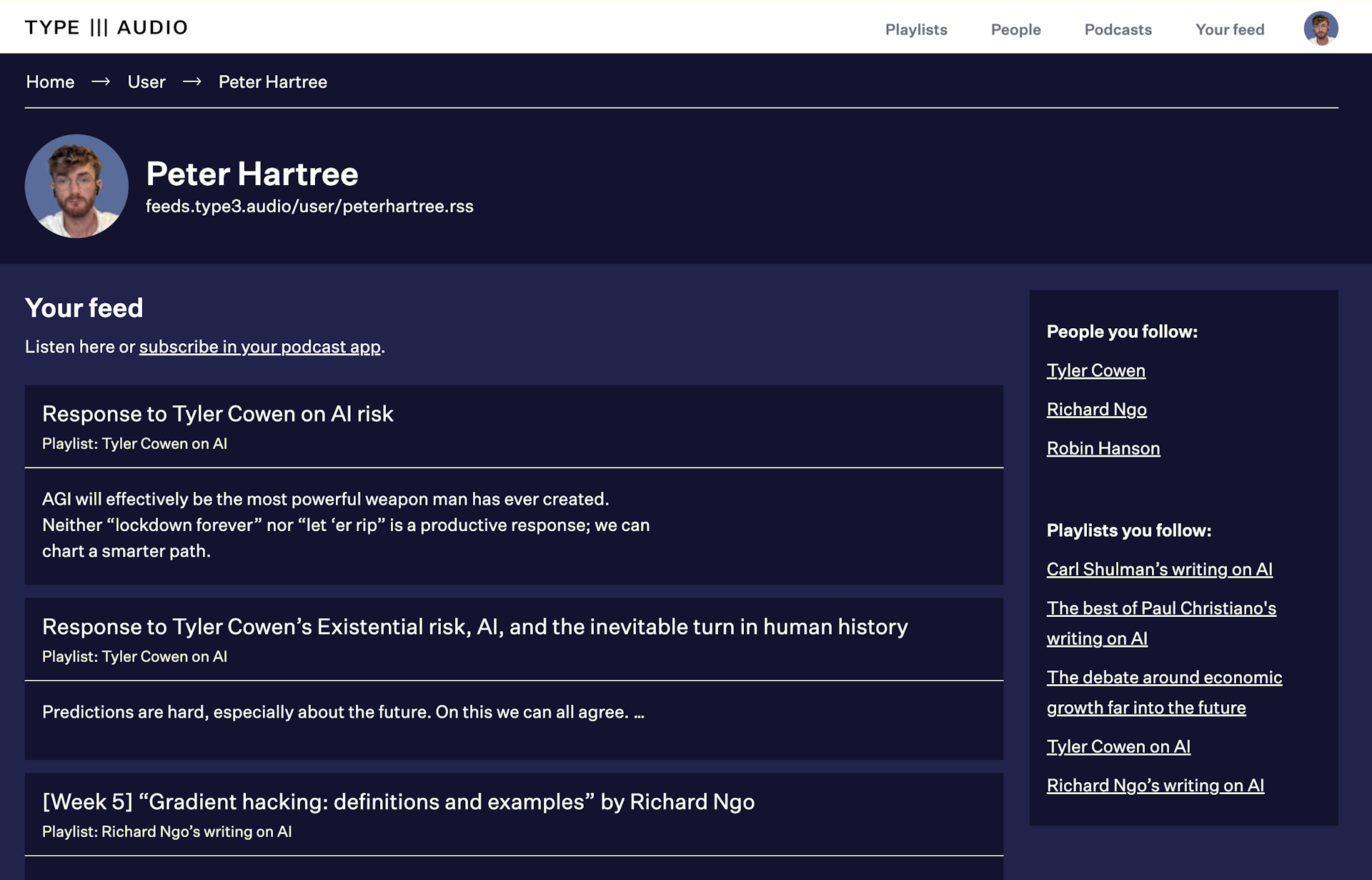

[Week 1] "AGI Safety From First Principles" by Richard Ngo

AI Safety Fundamentals: Alignment

Readings from the AI Safety Fundamentals: Alignment course.

This report explores the core case for why the development of artificial general intelligence (AGI) might pose an existential threat to humanity. It stems from my dissatisfaction with existing arguments on this topic: early work is less relevant in the context of modern machine learning, while more recent work is scattered and brief. This report aims to fill that gap by providing a detailed investigation into the potential risk from AGI misbehaviour, grounded in our current knowledge of machine learning, and highlighting important uncertainties. It identifies four key premises, evaluates existing arguments about them, and outlines some novel considerations for each.

Source:

https://drive.google.com/file/d/1uK7NhdSKprQKZnRjU58X7NLA1auXlWHt/view

Narrated for AI Safety Fundamentals by TYPE III AUDIO.