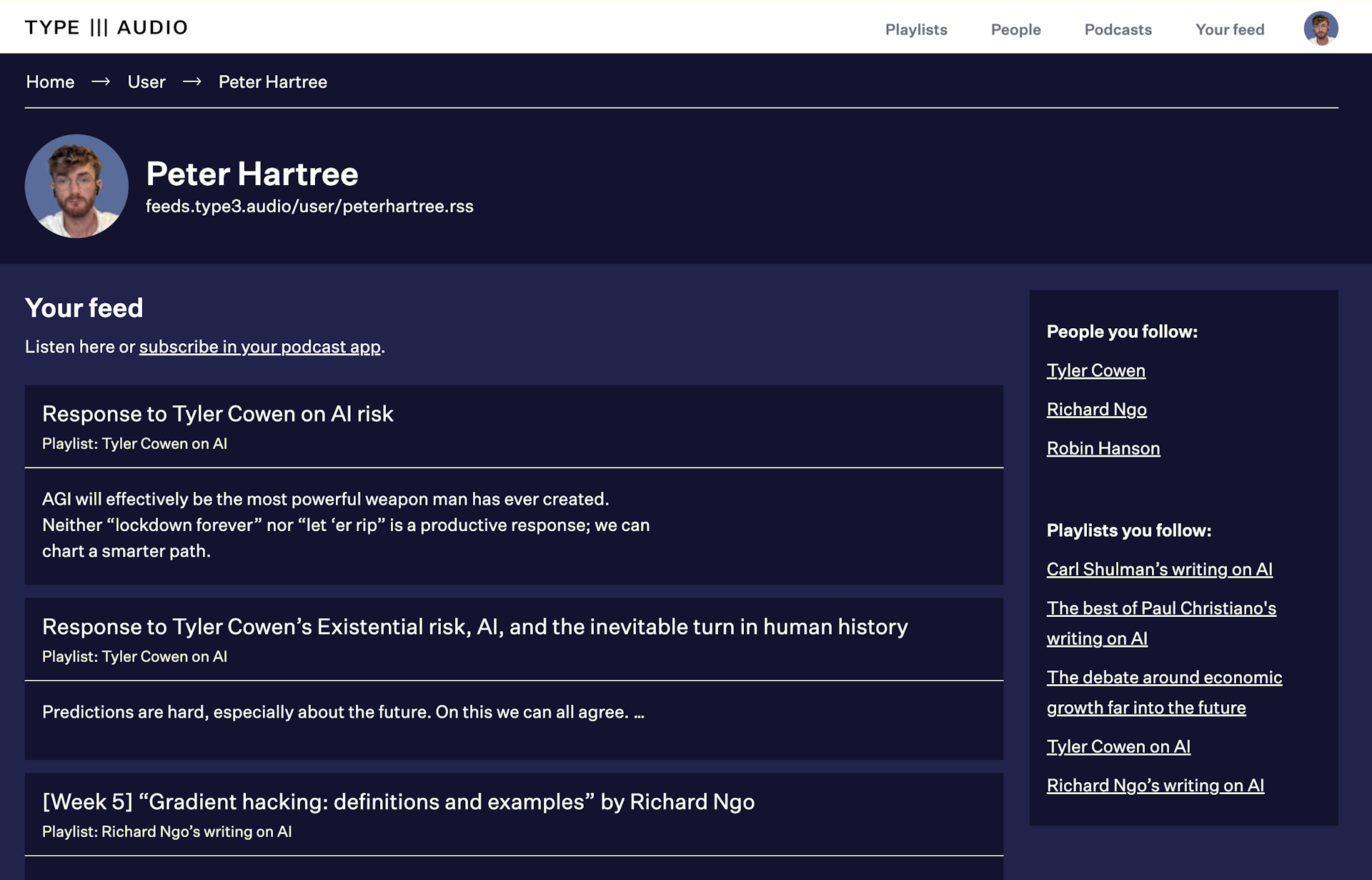

[Week 7] "Cooperation, Conflict, and Transformative Artificial Intelligence: Sections 1 & 2 — Introduction, Strategy and Governance" by Jesse Clifton

alignmentforum.org

AI Safety Fundamentals: Alignment

Readings from the AI Safety Fundamentals: Alignment course.

Transformative artificial intelligence (TAI) may be a key factor in the long-run trajectory of civilization. A growing interdisciplinary community has begun to study how the development of TAI can be made safe and beneficial to sentient life (Bostrom, 2014; Russell et al., 2015; OpenAI, 2018; Ortega and Maini, 2018; Dafoe, 2018). We present a research agenda for advancing a critical component of this effort: preventing catastrophic failures of cooperation among TAI systems. By cooperation failures we refer to a broad class of potentially catastrophic inefficiencies in interactions among TAI-enabled actors. These include destructive conflict; coercion; and social dilemmas (Kollock, 1998; Macy and Flache, 2002) which destroy value over extended periods of time. We introduce cooperation failures at greater length in Section 1.1. Karnofsky (2016) defines TAI as "AI that precipitates a transition comparable to (or more significant than) the agricultural or industrial revolution". Such systems range from the unified, agent-like systems which are the focus of, e.g., Yudkowsky (2013) and Bostrom (2014), to the "comprehensive AI services" envisioned by Drexler (2019), in which humans are assisted by an array of powerful domain-specific AI tools. In our view, the potential consequences of such technology are enough to motivate research into mitigating risks today, despite considerable uncertainty about the timeline to TAI (Grace et al., 2018) and the nature of TAI development.

Original text:

https://www.alignmentforum.org/s/p947tK8CoBbdpPtyK/p/KMocAf9jnAKc2jXri

Narrated for AI Safety Fundamentals by Perrin Walker of TYPE III AUDIO.