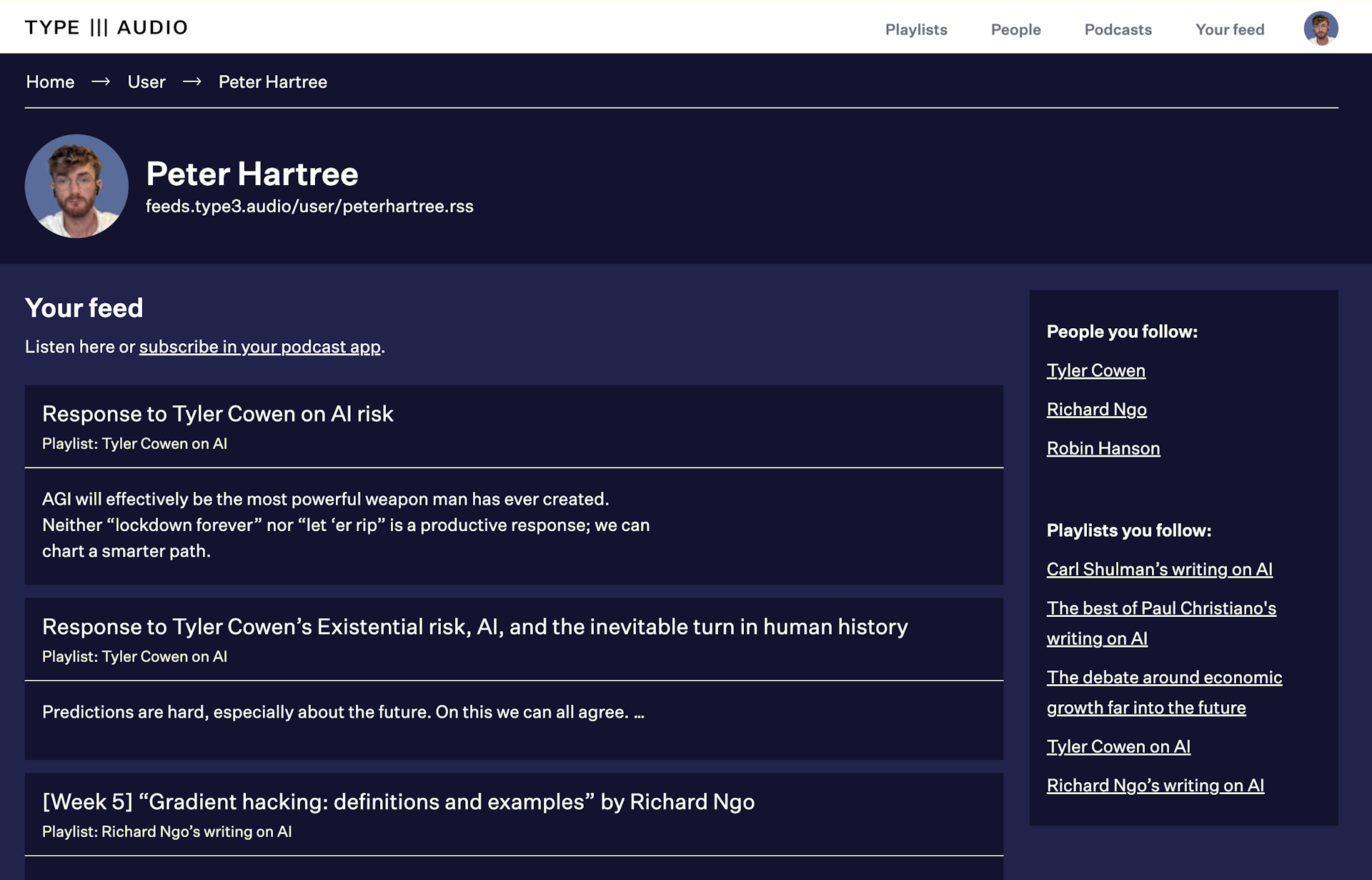

Nobody’s on the Ball on AGI Alignment

13 May 2023 · AI Safety Fundamentals: Governance

Readings from the AI Safety Fundamentals: Governance course.

Observing from afar, it’s easy to think there’s an abundance of people working on AGI safety. Everyone on your timeline is fretting about AI risk, and it seems like there is a well-funded EA-industrial-complex that has elevated this to their main issue. Maybe you’ve even developed a slight distaste for it all—it reminds you a bit too much of the woke and FDA bureaucrats, and Eliezer seems pretty crazy to you.

That’s what I used to think too, a couple of years ago. Then I got to see things more up close. And here’s the thing: nobody’s actually on the friggin’ ball on this one!

- There are far fewer people working on it than you might think. There are plausibly 100,000 ML capabilities researchers in the world (30,000 attended ICML alone) vs. 300 alignment researchers in the world, a factor of ~300:1. The scalable alignment team at OpenAI has all of ~7 people.

- Barely anyone is going for the throat of solving the core difficulties of scalable alignment. Many of the people who are working on alignment are doing blue-sky theory, pretty disconnected from actual ML models. Most of the rest are doing work that’s vaguely related, hoping it will somehow be useful, or working on techniques that might work now but predictably fail to work for superhuman systems.

There’s no secret elite SEAL team coming to save the day. This is it. We’re not on track.

Source:

https://www.forourposterity.com/nobodys-on-the-ball-on-agi-alignment/

Narrated for AI Safety Fundamentals by Perrin Walker of TYPE III AUDIO.

---