Carl Shulman

Carl Shulman is a Research Associate at the Future of Humanity Institute, Oxford University, where his work focuses on the long-run impacts of artificial intelligence and biotechnology. He is also an Advisor to the Open Philanthropy Project.

Links:

Website

All the podcast interviews and talks we can find on the internet. It’s “follow on Twitter”, but for audio. New episodes added often.

Curated by Alejandro Ortega.

A selection of writing from papers and the EA Forum

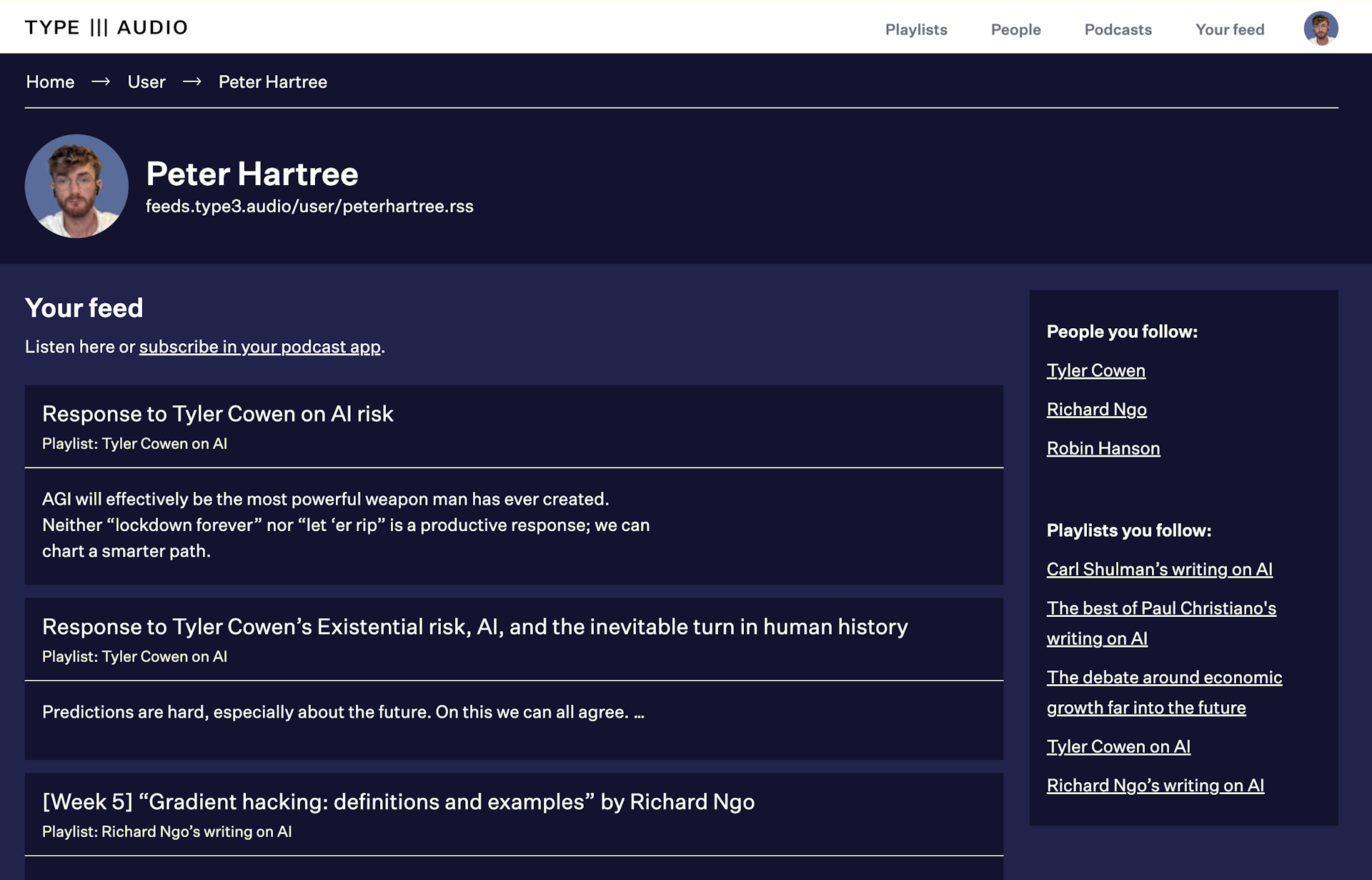

Playlists featuring Carl Shulman, among other writers.